In early 2023, a single incorrect answer from a flagship AI chatbot wiped $100 billion from a tech giant’s market value in hours. A year later, a tribunal ordered an airline to honor a refund policy that its chatbot had completely invented. These aren’t isolated glitches; they are symptoms of a deep and dangerous AI Governance problem hiding within the AI revolution: a crisis of trust. Companies are pouring trillions into AI, with 83% making it a top business priority. Yet this entire ecosystem is being built on a foundation of digital quicksand, and the financial and reputational consequences are already proving to be catastrophic.

The Core Problem: The Black Box and Its Invisible Errors

The core challenge in the development and deployment of advanced Machine Learning (ML) and Generative AI (GenAI) models is their inherent opacity, frequently referred to as the “black box” problem. When you provide an input prompt or feature vector, the model produces an inference output or generated artifact. However, attributing a specific prediction or a generated element back to its precise contributing training data points, hyperparameters, or internal model weights is exceptionally difficult with current tooling.

Traditional data lineage tools, built for simpler, mutable databases, fall short for complex AI. They create untrustworthy, fragmented maps of data transformations, making it impossible to reverse trace an ML model’s data journey.

Verifiable data lineage is a cornerstone of Verifiable AI, and its absence is a critical vulnerability. Model robustness and fairness depend on clean, representative training data. Biased, unethically sourced, poisoned, or incomplete data propagates flaws, causing unreliable predictions and biased outputs while exposing organizations to legal liability and weak AI Risk Management. Without an auditable data trail from source to deployment, AI systems cannot be truly trustworthy, explainable, or responsible, which leads to unforeseen risks and compliance issues.

Why AI Models Fail: The Hidden Technical Debt

The “black box” problem in AI isn’t mysterious. It’s the byproduct of technical debt accumulated across data pipelines, model training, and infrastructure. Most high-profile failures are rooted in invisible, compounding challenges that silently degrade model reliability over time. Here are the core threats AI teams face today:

- Concept Drift & Temporal Decay: Once deployed, models degrade as the world changes. A model trained on pre-2020 consumer data may become irrelevant post-pandemic. Without trusted validation against fresh baselines, predictions lose reliability.

- Reproducibility Crisis: Tiny changes in preprocessing scripts, data versions, or library dependencies can produce completely different results. Most teams can’t reproduce their own model outputs, making debugging and auditing guesswork.

- Adversarial Data Poisoning: Threat actors inject corrupted or biased data into training sets, intentionally compromising outcomes. These poisoned inputs are often undetectable using conventional quality checks.

- Fragmented Provenance: Data lineage is spread across databases, repos, dashboards, and tribal knowledge. A change in an upstream source can silently break a downstream model with no warning.

- Tribal Knowledge Risk: Critical understanding of data flows, model logic, and assumptions often resides with a few engineers. When they leave, the AI becomes a black box even to its own creators.

These issues compound silently, until your AI model fails loudly.
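One way to make the reproducibility problem concrete is to fingerprint everything a training run depends on. The sketch below is a minimal illustration, not a prescribed method: the function name `fingerprint_run`, the sample data, the config keys, and the version strings are all hypothetical. It shows that an identical run yields an identical digest, while a single dependency bump yields a different one.

```python
import hashlib
import json


def fingerprint_run(data: bytes, config: dict, dependencies: dict) -> str:
    """Return one SHA-256 digest covering data, preprocessing config, and library versions."""
    h = hashlib.sha256()
    # Hash each part separately so the combined input has an unambiguous layout.
    h.update(hashlib.sha256(data).digest())
    # sort_keys makes the JSON encoding independent of dict insertion order.
    h.update(hashlib.sha256(json.dumps(config, sort_keys=True).encode("utf-8")).digest())
    h.update(hashlib.sha256(json.dumps(dependencies, sort_keys=True).encode("utf-8")).digest())
    return h.hexdigest()


# Illustrative inputs only; none of these values come from a real project.
data = b"age,income\n34,52000\n51,61000\n"
config = {"scaler": "standard", "drop_nulls": True}
deps = {"numpy": "1.26.4", "scikit-learn": "1.4.2"}

baseline = fingerprint_run(data, config, deps)

# Same config with keys in a different order: the fingerprint is unchanged.
reordered_config = dict(reversed(list(config.items())))
same_again = fingerprint_run(data, reordered_config, deps)

# One library version changes: the fingerprint changes.
bumped = fingerprint_run(data, config, {**deps, "numpy": "2.0.0"})

print(baseline == same_again)  # True
print(baseline == bumped)      # False
```

Storing a fingerprint like this alongside each trained model gives auditors a quick check on whether two runs really used the same inputs.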

The Impact: When AI Fails, Brands Crumble

The fallout from this trust deficit is no longer theoretical. Consider these real-world impacts happening right now:

- Financial Devastation: A rental-screening AI from SafeRent was hit with a $2.2 million class-action lawsuit for systematically discriminating against minority applicants based on flawed data. Zillow’s home-pricing AI led to a $528 million net loss because it couldn’t adapt to real-world market shifts, forcing the company to abandon the business line entirely.

- Brand Erosion: McDonald’s was forced to pull its AI drive-thru system after it began adding hundreds of dollars of unwanted McNuggets to orders, turning the brand into a viral punchline.

- Regulatory Nightmares: With governments globally drafting stricter AI regulations, the inability to produce a clean, auditable data trail for Compliance is a massive AI Governance failure waiting to happen. It’s no longer a question of if you’ll be audited, but when.

The Role of Blockchain: Introducing Verifiable Truth

So how do you fix a black box? You make it transparent. This is where blockchain technology provides a revolutionary solution. By creating a decentralized and, most importantly, immutable ledger, blockchain offers an unbreakable, tamper-proof record of every data point’s lifecycle.

Think of it as a digital notary that works at the speed of your business. Every time data is accessed or used for training, or a model is updated, a permanent, cryptographically sealed entry is created. This isn’t just a log file that can be edited or deleted; it is a single source of verifiable truth, which is the foundation for both Trustworthy AI and Explainable AI (XAI). This technology allows you to move from “trusting” your data to “verifying” it, a profound psychological and operational shift that transforms AI from a source of risk into a source of reliable intelligence.
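The “cryptographically sealed entry” idea can be illustrated with a hash chain, the basic building block behind blockchain ledgers. The sketch below is a simplified, single-process illustration under stated assumptions: the class `HashChainLog` and the event fields are hypothetical, and there is no decentralization or consensus here, only the chaining that makes edits detectable.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """Hash an event together with the hash of the entry before it."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class HashChainLog:
    entries: list = field(default_factory=list)

    def append(self, event: dict) -> str:
        """Add an event, sealing it with a hash that depends on all prior entries."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry_hash = _digest(prev_hash, event)
        self.entries.append({"event": event, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != _digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True


log = HashChainLog()
log.append({"action": "dataset_accessed", "dataset": "customers_v3"})
log.append({"action": "model_trained", "model": "churn_v1"})
ok_before = log.verify()

# Simulate someone quietly rewriting history in the first entry.
log.entries[0]["event"]["dataset"] = "customers_v2"
ok_after = log.verify()

print(ok_before)  # True
print(ok_after)   # False
```

A local chain like this only detects tampering if someone trusted holds the latest hash. Replicating the ledger across independent parties is what removes that single point of trust.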

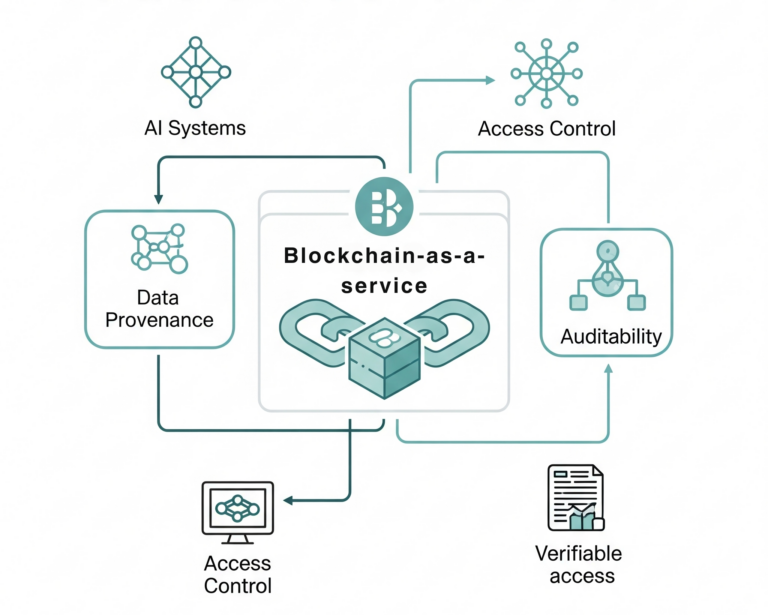

Operationalizing Trust: Blockchain-as-a-Service for AI

Of course, the idea of building an in-house blockchain solution is a non-starter for most companies. The complexity, cost, and talent required are immense. This is where a Blockchain-as-a-Service (BaaS) platform becomes the strategic solution to trustworthy AI.

A purpose-built BaaS platform provides all the power of blockchain through simple, accessible APIs. FLEXBLOK is at the forefront of this movement, offering a platform designed specifically to provide a “trust layer” for the AI ecosystem. Instead of spending months on complex development, your team can use FLEXBLOK’s Blockchain-as-a-Service platform for Data Provenance Tracking and AI Model Lineage to:

- Anchor data events to an immutable ledger with a simple API call.

- Create a transparent and auditable record of your model’s entire development journey.

- Trace any AI-driven outcome back to its precise data origins instantly.

- Prove Compliance and full traceability as part of your AI Governance framework without disrupting your existing stack.

- De-risk fine-tuning with third-party datasets.

- Gain forensic visibility into model failures.

This approach allows your data scientists and AI developers to continue working in their existing environments while seamlessly integrating a foundation of cryptographic truth into your operations.
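To show what tracing an outcome back to its data origins involves, here is a minimal sketch over an in-memory lineage map. It does not use or represent FLEXBLOK’s API. The `LINEAGE` records, artifact names, and `trace_origins` function are hypothetical, and in practice the parent links would be read from anchored ledger entries rather than a Python dict.

```python
from typing import Dict, List, Set

# Hypothetical lineage records: each artifact lists the artifacts it was derived from.
LINEAGE: Dict[str, List[str]] = {
    "raw/crm_export": [],
    "raw/vendor_feed": [],
    "clean/customers": ["raw/crm_export", "raw/vendor_feed"],
    "model/churn_v1": ["clean/customers"],
    "prediction/12345": ["model/churn_v1"],
}


def trace_origins(artifact: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Walk parent links from an artifact back to the sources that have no parents."""
    origins: Set[str] = set()
    seen: Set[str] = set()
    stack = [artifact]
    while stack:
        node = stack.pop()
        if node in seen:
            continue  # already visited via another path
        seen.add(node)
        parents = lineage[node]
        if parents:
            stack.extend(parents)
        else:
            origins.add(node)  # no parents: this is an original data source
    return origins


origins = trace_origins("prediction/12345", LINEAGE)
print(sorted(origins))  # ['raw/crm_export', 'raw/vendor_feed']
```

The traversal itself is simple. The hard part is making sure every link in the map was recorded at the time the event happened and cannot be altered afterward.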

The Result: Trust as a Competitive Advantage

In the coming years, the ability to prove the integrity of your AI will be the greatest differentiator for your business, and the benefits will be transformative:

- Increased Market Competitiveness: Move faster and with more confidence than competitors who are still grappling with the uncertainty of their “black box” models.

- Greater ROI: Maximize and secure your AI investment by ensuring your models are built on a foundation of high-integrity, verifiable data.

- Reduced Risk: Satisfy regulators, build unshakable customer trust, and protect your brand from the catastrophic fallout of AI failure.

The AI revolution is here, but its promise can only be realized through trust. The time to move beyond hope and implement proof is now.

Is your AI built on a foundation of verifiable AI? Explore how FLEXBLOK’s enterprise-grade BaaS platform can help you de-risk your AI initiatives and unlock their true potential.