Artificial intelligence (AI) is no longer a niche technology. Generative models, predictive analytics and agentic systems are woven into financial services, healthcare, supply chains and public infrastructure. As adoption accelerates, regulators and the public are demanding responsible AI: systems that are fair, reliable, transparent and accountable. Responsible AI is now more than a buzzword; it is a governing philosophy. Research notes that only 35% of consumers trust how companies deploy AI, and 77% want organizations held accountable for misuse. This trust deficit has led policymakers to adopt laws like the EU AI Act and to propose updates to the General Data Protection Regulation (GDPR), measures that call for records of AI decisions. One of the most pressing elements of these frameworks is decision attribution: being able to trace who (or what) made a particular AI decision, how it was reached and why it was acceptable.

For compliance officers and enterprise technology leaders, decision attribution has become a strategic priority. This blog explores the latest trends around responsible AI, explains how blockchain can be leveraged to enable robust decision attribution, highlights real-world examples and outlines practical guidance to build AI systems that are transparent, auditable and trustworthy.

Why decision attribution is gaining momentum

Growing regulatory pressure

Lawmakers are focusing on transparency and explainability. The EU AI Act requires high-risk AI systems to support automatic logging of events over their lifetime and to be designed for effective human oversight. Similarly, GDPR's rules on automated decision-making, often summarized as a right to explanation, require organizations to give individuals meaningful information about the logic behind automated decisions. These requirements highlight the need for decision logs that capture inputs, the model used and outputs.
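Neither regulation prescribes a record format. As a minimal sketch, assuming JSON-serializable inputs and outputs, a decision log entry might look like the following. The `DecisionRecord` class, its field names and the example values are hypothetical; the point is that canonical serialization gives each record a stable fingerprint that can later be anchored in a ledger.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One automated decision: what went in, which model ran, what came out."""

    decision_id: str
    model_name: str
    model_version: str
    inputs: dict
    output: dict
    # UTC timestamp taken when the record is created, unless supplied explicitly.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def canonical_json(self) -> str:
        # sort_keys (applied recursively) and fixed separators give a stable
        # serialization, so equal records always hash to the same digest.
        return json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))

    def digest(self) -> str:
        """SHA-256 fingerprint suitable for anchoring in a ledger."""
        return hashlib.sha256(self.canonical_json().encode("utf-8")).hexdigest()


# Hypothetical credit decision used purely for illustration.
record = DecisionRecord(
    decision_id="loan-0001",
    model_name="credit_risk",
    model_version="2.3.1",
    inputs={"income": 52000, "debt_ratio": 0.31},
    output={"decision": "approve", "score": 0.82},
)
print(record.canonical_json())
print(record.digest())
```

Sorting keys means two systems that build the same record with dictionaries in a different order still agree on the digest, which matters once several parties verify the same log.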

Public and stakeholder trust

Consumer trust in AI lags behind adoption. A survey cited by the Blockchain Council shows that consumers want clear accountability mechanisms, and enterprises that cannot explain or justify AI outcomes risk reputational damage. AI decision attribution crises, in which automated systems make controversial or discriminatory decisions without clear accountability, are now a recognized PR risk.

AI governance standards

Leading technology companies have introduced responsible AI standards centered on fairness, reliability, safety, privacy, inclusiveness, transparency and accountability. Beyond principles, researchers have proposed concrete technical mechanisms. A 2025 generative AI data governance framework recommends signed policy attestations, non‑repudiable governance logs and decision attribution linking outcomes to responsible entities. Transparent dashboards and explainability techniques such as SHAP or LIME help stakeholders understand enforcement decisions.

Complex agentic systems

Autonomous agents introduce new identity challenges. In distributed environments, agents often impersonate users to call downstream services, blurring accountability. Christian Posta illustrates the problem with a supply chain agent: if the agent impersonates a user when placing an order, the user may be wrongly blamed, whereas a delegation pattern lets the agent act explicitly on the user's behalf and creates an audit trail showing who authorized the action. As agentic AI proliferates, robust identity management and delegation mechanisms are essential for decision attribution.
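The difference between impersonation and delegation shows up directly in the audit record. The sketch below is loosely modeled on the `act` (actor) claim from OAuth 2.0 Token Exchange (RFC 8693), where a token names both the subject and the party acting for it; the `Principal` type, helper functions and identifiers are hypothetical and do not represent any specific product's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Principal:
    subject: str                 # whose authority the action uses (e.g. a user)
    actor: Optional[str] = None  # who actually performed it (e.g. an AI agent)


def impersonate(user: str) -> Principal:
    # The agent presents only the user's identity; downstream services
    # cannot distinguish the agent's actions from the user's own.
    return Principal(subject=user)


def delegate(user: str, agent: str) -> Principal:
    # The agent acts on behalf of the user; both identities travel together.
    return Principal(subject=user, actor=agent)


def audit_entry(principal: Principal, action: str) -> dict:
    """Build the audit record a downstream service would write."""
    return {
        "action": action,
        "on_behalf_of": principal.subject,
        "performed_by": principal.actor or principal.subject,
        "delegated": principal.actor is not None,
    }


print(audit_entry(impersonate("alice"), "place_order"))
print(audit_entry(delegate("alice", "procurement-agent-7"), "place_order"))
```

With impersonation, the log says Alice placed the order; with delegation, it records that the procurement agent placed it under Alice's authority, which is exactly the attribution gap Posta describes.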

Challenges in achieving decision attribution

Several technical and organizational hurdles make decision attribution difficult:

- Opaque algorithms – Complex models like deep neural networks are often black boxes. Without explainability techniques, it is hard to reconstruct how a decision was made.

- Model lifecycle complexity – Data scientists choose variables, training data and model architectures. Without standardized documentation, it becomes impossible to trace decisions after staff turnover. FICO’s Scott Zoldi notes that historically, data scientists stored models in personal directories; when they left, organizations lost understanding of how models were built or what assumptions underpinned them.

- Data provenance – AI systems depend on data pipelines that may include third‑party sources. Tracking lineage from raw data through preprocessing to model input is non‑trivial.

- Regulatory fragmentation – Requirements differ across jurisdictions. Balancing GDPR’s right to erasure with blockchain’s immutability, for instance, demands careful design.

Addressing these challenges requires a combination of governance processes and enabling technologies. Blockchain is emerging as a foundational platform for decision attribution because of its immutability, transparency and decentralization.

How blockchain enables decision attribution

Blockchain is best known as the technology underlying cryptocurrencies, but its core properties (immutable ledgers, cryptographic signatures and decentralized consensus) make it a powerful tool for AI governance. A World Bank blog summarizes four key features: immutability (data cannot be modified without leaving a trail); transparency (authorized users share real‑time data); non-repudiation (digital signatures prevent parties from denying their actions); and traceability (transfers can be traced back to origin, purpose and beneficiary). These properties address many of the decision attribution challenges described above.
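The "modified without leaving a trail" property comes largely from hash chaining: every entry commits to the hash of the entry before it, so editing any past record breaks every later link. The single-process `HashChainedLog` below is a hypothetical minimal sketch of that mechanism only; it has no consensus, replication or signatures, which a real blockchain adds on top.

```python
import hashlib
import json


class HashChainedLog:
    """Append-only log in which each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []  # list of (payload, prev_hash, entry_hash)

    @staticmethod
    def _hash(payload: dict, prev_hash: str) -> str:
        body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, payload: dict) -> str:
        prev_hash = self.entries[-1][2] if self.entries else self.GENESIS
        entry_hash = self._hash(payload, prev_hash)
        self.entries.append((payload, prev_hash, entry_hash))
        return entry_hash

    def verify(self) -> bool:
        """Recompute every link; any edited payload or reordered entry fails."""
        prev_hash = self.GENESIS
        for payload, stored_prev, stored_hash in self.entries:
            if stored_prev != prev_hash or self._hash(payload, prev_hash) != stored_hash:
                return False
            prev_hash = stored_hash
        return True


log = HashChainedLog()
log.append({"decision_id": "loan-0001", "output": "approve"})
log.append({"decision_id": "loan-0002", "output": "deny"})
print(log.verify())  # chain intact

log.entries[0][0]["output"] = "deny"  # quietly rewrite history
print(log.verify())  # the edit is detected
```

Hash chaining makes tampering evident rather than impossible; distributing copies of the chain across independent parties is what makes a silent rewrite impractical.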

Recording decision events on-chain

Researchers propose capturing AI decisions as blockchain transactions, where each inference logs key input parameters, the model version and the resulting output to an immutable ledger. A 2024 MDPI study describes a framework in which IoT devices sign and submit decision records to a permissioned blockchain, creating a tamper-resistant audit trail that regulators and users can verify. The framework was tested in healthcare and industrial IoT scenarios, demonstrating improved accountability. The same study notes that regulators demand explainability and that blockchain's decentralized ledger ensures that, once recorded, data cannot be altered. An AI decision provenance log that records inputs, outputs and model metadata allows investigators to reconstruct the decision path.
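The "sign and submit" step can be sketched with Python's standard library. This illustration uses HMAC-SHA256 with hypothetical per-device keys because it needs no third-party packages. HMAC only proves that a key holder produced the record; since the verifier holds the same key, it does not give true non-repudiation. A real deployment would use asymmetric signatures (for example ECDSA or Ed25519), and the MDPI study's actual scheme may differ.

```python
import hashlib
import hmac
import json

# Hypothetical per-device secret keys, provisioned out of band.
# (HMAC is a stand-in here; asymmetric signatures are needed for non-repudiation.)
DEVICE_KEYS = {"sensor-17": b"demo-key-do-not-use"}


def _canonical(record: dict) -> bytes:
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_record(device_id: str, record: dict) -> dict:
    """Device side: attach an authentication tag to a decision record."""
    tag = hmac.new(DEVICE_KEYS[device_id], _canonical(record), hashlib.sha256).hexdigest()
    return {"device_id": device_id, "record": record, "tag": tag}


def verify_submission(submission: dict) -> bool:
    """Ledger side: accept only records whose tag matches a known device key."""
    key = DEVICE_KEYS.get(submission["device_id"])
    if key is None:
        return False
    expected = hmac.new(key, _canonical(submission["record"]), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, submission["tag"])


submission = sign_record("sensor-17", {"reading": 81.2, "decision": "raise_alarm"})
print(verify_submission(submission))
```

Only submissions that pass verification would be written to the ledger, so every stored decision can be tied back to the device that produced it.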

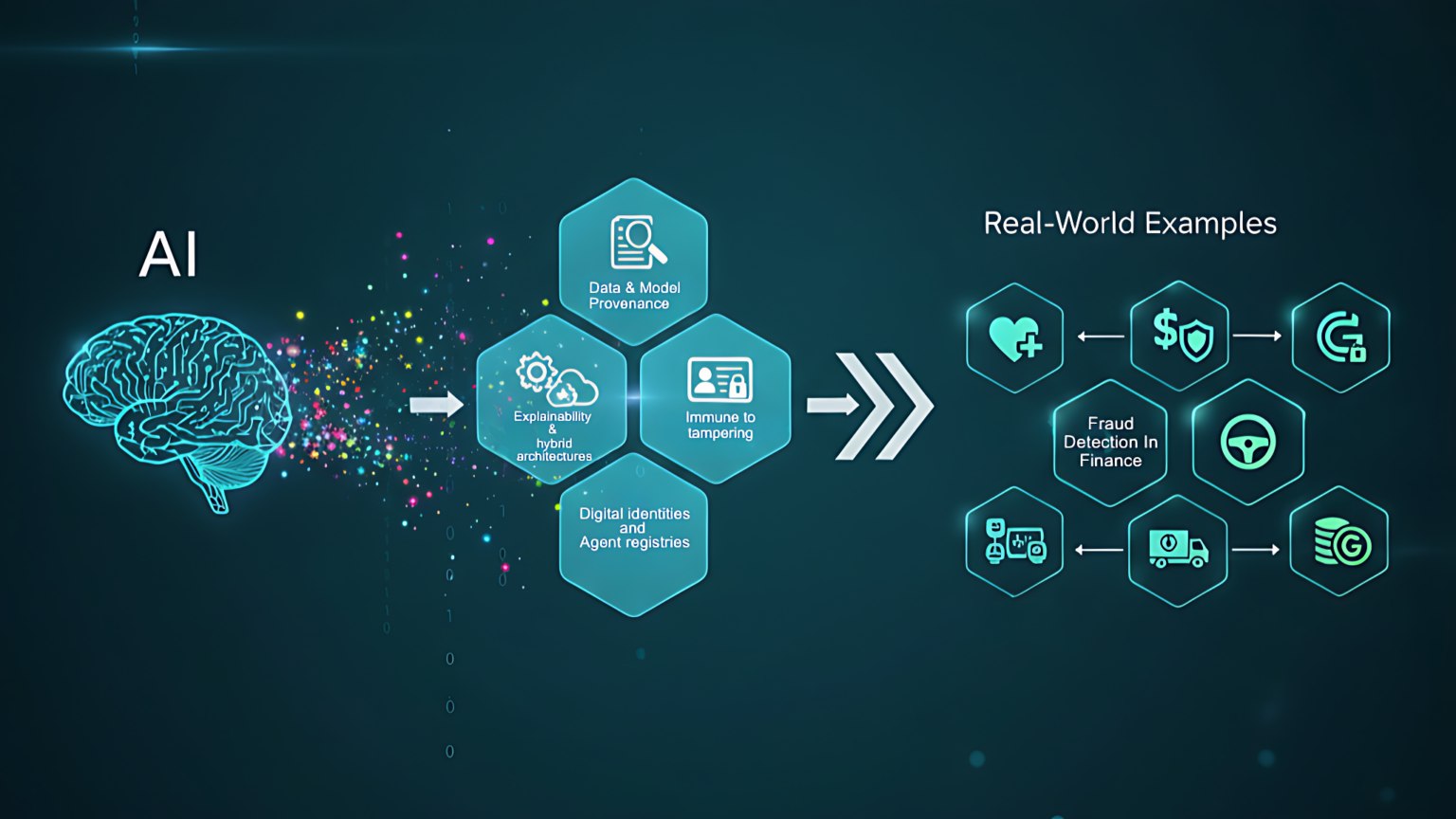

Data and model provenance

Blockchain can track the entire AI model lifecycle. FICO’s patented approach uses a blockchain ledger to record every decision made about a machine‑learning model: variables chosen, model design, training and test data, feature selection and the scientists involved. By codifying roles and tasks on-chain, organizations enforce a corporate model development standard; the ledger acts as a contract specifying objectives, algorithms allowed, training data requirements, ethics and robustness tests and success criteria. Before any model is released, the blockchain verifies that all requirements have been met. External auditors can review the ledger to confirm that variables were reviewed and approved, and trace specific variables across models. Blockchain therefore brings end‑to‑end transparency and accountability to the model development process, reducing bias and “hallucinations”.
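FICO's implementation is proprietary, so the following is only a hypothetical illustration of the release-gate idea: ledger events record which development steps were completed and who approved them, and a model may ship only when every step required by the development standard has an approved event. The step names, event fields and identifiers are assumptions.

```python
# Hypothetical corporate model development standard.
REQUIRED_STEPS = {
    "variables_approved",
    "training_data_registered",
    "bias_test_passed",
    "robustness_test_passed",
}


def missing_requirements(ledger_events: list, model_id: str) -> set:
    """Return required steps with no approved ledger event for this model."""
    completed = {
        event["step"]
        for event in ledger_events
        if event["model_id"] == model_id and event.get("approved_by")
    }
    return REQUIRED_STEPS - completed


def can_release(ledger_events: list, model_id: str) -> bool:
    return not missing_requirements(ledger_events, model_id)


events = [
    {"model_id": "risk-v4", "step": "variables_approved", "approved_by": "j.doe"},
    {"model_id": "risk-v4", "step": "training_data_registered", "approved_by": "a.lee"},
    {"model_id": "risk-v4", "step": "bias_test_passed", "approved_by": None},
]
print(sorted(missing_requirements(events, "risk-v4")))
print(can_release(events, "risk-v4"))
```

Because the check reads only approved events, an unapproved entry (such as the bias test above) still blocks release, and an auditor can see exactly which step is outstanding and who signed off on the others.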

Explainability and hybrid architectures

Blockchain alone does not explain decisions. It must be combined with explainability frameworks. An arXiv paper suggests using smart contracts to log model inputs, parameters and outputs, while storing SHAP or LIME explanations on-chain or off-chain. The paper notes that different blockchain architectures suit different requirements: Ethereum offers sophisticated smart contracts; Hyperledger Fabric provides permissioned ledgers; Corda excels at privacy-preserving transactions. Combining blockchain with explainability tools yields transparent AI pipelines and helps meet regulatory requirements.
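The arXiv paper does not prescribe code. As a self-contained illustration, the sketch below uses the one case where SHAP values have a simple closed form: for a linear model f(x) = b + Σ wᵢxᵢ with independent features, feature i's SHAP value is wᵢ(xᵢ − E[xᵢ]), with the expectation taken over a background dataset. The explanation would be stored off-chain, and only its hash would be committed on-chain. Model weights, feature names and helper names are hypothetical; general models would need the `shap` library or similar.

```python
import hashlib
import json
from statistics import fmean


def linear_shap(weights: dict, x: dict, background: list) -> dict:
    """Exact SHAP values for f(x) = bias + sum(w_i * x_i), assuming independent features."""
    means = {name: fmean(row[name] for row in background) for name in weights}
    return {name: weights[name] * (x[name] - means[name]) for name in weights}


def explanation_commitment(decision_id: str, shap_values: dict) -> str:
    """Hash to anchor on-chain; the full explanation itself stays off-chain."""
    payload = json.dumps({"decision_id": decision_id, "shap": shap_values}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


weights = {"income": 0.5, "debt_ratio": -2.0}
bias = 1.0
background = [{"income": 2.0, "debt_ratio": 1.0}, {"income": 4.0, "debt_ratio": 3.0}]
x = {"income": 5.0, "debt_ratio": 1.0}

phi = linear_shap(weights, x, background)
print(phi)
print(explanation_commitment("loan-0001", phi))
```

SHAP values are additive: the baseline prediction (the model evaluated at the feature means) plus the sum of the values equals the prediction for x. This gives auditors a quick consistency check on any stored explanation.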

Tamper-resistant and aligned with regulations

Applying blockchain to AI governance increases trust because records are immutable and auditable. The same paper argues that blockchain helps organizations comply with GDPR and other regulations by providing verifiable records of every step in the decision-making process. The INATBA report on AI–blockchain convergence further explains that blockchain's cryptographic hashes and time stamping guarantee tamper-resistant data records and audit trails. Digital signatures verify authenticity while timestamps preserve chronological order, and linking blockchain transactions to off-chain data allows auditors to verify computations without exposing sensitive information. Permissioned blockchains offer controlled access for auditors and regulators, while smart contracts automate compliance checks such as enforcing data retention policies.
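A retention check is simple enough to sketch. It is shown here in Python for readability. On a real ledger, this logic would live in a smart contract or chaincode and typically act on off-chain records referenced by on-chain pointers. The categories, retention periods and `StoredRecord` type are hypothetical, not legal guidance.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class StoredRecord:
    record_id: str
    category: str
    created_at: datetime


# Hypothetical retention periods per data category.
RETENTION = {
    "inference_input": timedelta(days=90),
    "decision_log": timedelta(days=3650),
}


def due_for_deletion(records: list, now: datetime) -> list:
    """IDs of records held longer than their category's retention period."""
    return [r.record_id for r in records if now - r.created_at > RETENTION[r.category]]


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
records = [
    StoredRecord("r1", "inference_input", now - timedelta(days=120)),
    StoredRecord("r2", "inference_input", now - timedelta(days=10)),
    StoredRecord("r3", "decision_log", now - timedelta(days=120)),
]
print(due_for_deletion(records, now))
```

Because the rule is applied mechanically, the same inputs always produce the same deletion list, and executing it as a contract means the enforcement itself is recorded on the ledger.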

Digital identities and agent registries

The ETHOS framework for AI agents uses blockchain and self‑sovereign identity (SSI) to assign unique identifiers and compliance credentials to each agent. Decision events, performance metrics and bias mitigation evidence are logged on-chain via soulbound tokens (non-transferable tokens representing credentials). Governance actions (e.g., model updates or policy changes) are recorded as transactions in a decentralized autonomous organization (DAO), creating an immutable audit trail. This ensures that each agent’s actions can be attributed to a specific identity and governance context.
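ETHOS itself relies on SSI identifiers, soulbound tokens and a DAO. The in-memory `AgentRegistry` below is only a hypothetical sketch of the core rules: each agent gets a unique registered identity, credentials are bound to that identity and cannot be transferred, and every decision is appended to a log under the agent's ID.

```python
class AgentRegistry:
    """Toy registry: unique agent IDs, non-transferable credentials, append-only events."""

    def __init__(self):
        self._credentials = {}  # agent_id -> set of credential names
        self.events = []        # append-only list of (agent_id, event) tuples

    def register(self, agent_id: str) -> None:
        if agent_id in self._credentials:
            raise ValueError(f"agent {agent_id!r} is already registered")
        self._credentials[agent_id] = set()
        self.events.append((agent_id, "registered"))

    def issue(self, agent_id: str, credential: str) -> None:
        self._credentials[agent_id].add(credential)
        self.events.append((agent_id, f"issued:{credential}"))

    def transfer(self, credential: str, from_agent: str, to_agent: str) -> None:
        # "Soulbound": a credential stays with the identity it was issued to.
        raise PermissionError("credentials are soulbound and cannot be transferred")

    def has(self, agent_id: str, credential: str) -> bool:
        return credential in self._credentials.get(agent_id, set())

    def log_decision(self, agent_id: str, decision: str) -> None:
        if agent_id not in self._credentials:
            raise KeyError(f"unregistered agent {agent_id!r}")
        self.events.append((agent_id, f"decision:{decision}"))


registry = AgentRegistry()
registry.register("agent-42")
registry.issue("agent-42", "kyc-compliant")
registry.log_decision("agent-42", "approve-order-981")
print(registry.events)
```

Refusing transfers is what makes a credential meaningful for attribution: if agent-42 holds a compliance credential, no other agent can borrow it to make its own decisions look compliant.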

Real-world examples of blockchain-enabled decision attribution

Blockchain-based audit trails are no longer theoretical. Various industries are piloting or deploying solutions:

- Healthcare diagnostics – In medical AI, trust is paramount. The arXiv paper reports prototypes where diagnostic decisions and associated data are logged on a permissioned blockchain. This provides an immutable record that clinicians and regulators can review if a misdiagnosis occurs.

- Fraud detection in finance – Financial institutions use blockchain to secure transaction data and AI fraud detection models, ensuring that both transaction records and AI decisions are traceable. FICO's model governance ledger, described earlier, is a real-world implementation in credit scoring and risk management.

- Autonomous vehicles – Companies like Bosch and IBM have explored blockchain-based data logging for autonomous vehicles. When an accident occurs, the recorded sensor data and AI decisions can be audited.

- Public financial management – A World Bank pilot project, FundsChain, records public project expenditures on a blockchain to ensure transparency and traceability; the same principles apply to AI-driven resource allocation.

- Supply chain agents – Agentic systems manage procurement and logistics. Delegation patterns and on-chain identities ensure that orders placed by AI agents can be traced back to the human who authorized them.

- Generative AI data governance – The generative AI governance framework suggests storing policy attestations and governance logs on blockchain. Hashing training data and logging model versions ensures verifiable lineage.
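The "hash training data and log model versions" idea from the last example can be sketched as follows. This approach hashes each training row, sorts the row hashes so the fingerprint does not depend on row order, and then hashes the sorted list. The function names and lineage fields are hypothetical; large datasets would usually be hashed per file or per shard instead of per row.

```python
import hashlib
import json


def dataset_fingerprint(rows: list) -> str:
    """Order-independent SHA-256 fingerprint of a list of JSON-serializable rows."""
    row_hashes = sorted(
        hashlib.sha256(json.dumps(row, sort_keys=True).encode("utf-8")).hexdigest()
        for row in rows
    )
    return hashlib.sha256("".join(row_hashes).encode("utf-8")).hexdigest()


def lineage_entry(model_name: str, model_version: str, rows: list) -> dict:
    """Record tying a model version to the exact data it was trained on."""
    return {
        "model": model_name,
        "version": model_version,
        "training_data_sha256": dataset_fingerprint(rows),
        "row_count": len(rows),
    }


training_rows = [{"text": "example one", "label": 0}, {"text": "example two", "label": 1}]
print(lineage_entry("support-llm", "1.4.0", training_rows))
```

An auditor who later receives the claimed training set can recompute the fingerprint and compare it with the logged value; any added, removed or edited row changes the result.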

Strategic guidance for enterprises and compliance teams

1. Build a robust governance framework

Adopt a layered approach combining policies, human oversight and technical controls. Responsible AI frameworks such as Microsoft's emphasize fairness, reliability, safety, privacy and transparency (blockchain-council.org). Complement high-level principles with operational processes: pre-deployment assessments, continuous monitoring and bias mitigation techniques (e.g., demographic representation policies, balance monitoring, bias circuit breakers). Human oversight should be tiered with escalation paths for complex scenarios (journalwjarr.com).
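A bias circuit breaker can take many forms. The sketch below is one hypothetical version: it tracks approval rates per group and, once at least two groups have enough samples and their rates differ by more than a threshold (the demographic parity gap), it trips and routes further decisions to human review. The metric, threshold and sample minimum are assumptions to be set by policy.

```python
from collections import defaultdict


class BiasCircuitBreaker:
    """Trips when the approval-rate gap between groups exceeds max_gap."""

    def __init__(self, max_gap: float = 0.1, min_samples: int = 50):
        self.max_gap = max_gap
        self.min_samples = min_samples           # ignore groups with too little data
        self.counts = defaultdict(lambda: [0, 0])  # group -> [approvals, total]
        self.tripped = False

    def record(self, group: str, approved: bool) -> None:
        counts = self.counts[group]
        counts[0] += int(approved)
        counts[1] += 1
        self._check()

    def _check(self) -> None:
        rates = [a / n for a, n in self.counts.values() if n >= self.min_samples]
        if len(rates) >= 2 and max(rates) - min(rates) > self.max_gap:
            self.tripped = True  # stays open until a human resets it

    def route(self) -> str:
        return "human_review" if self.tripped else "automated"


breaker = BiasCircuitBreaker(max_gap=0.1, min_samples=10)
for i in range(10):
    breaker.record("group_a", approved=i < 8)  # 80% approval
for i in range(10):
    breaker.record("group_b", approved=i < 5)  # 50% approval
print(breaker.route())
```

The breaker does not reset itself. This matches the tiered-oversight idea: once automated decisions look skewed, a human must investigate before automation resumes.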

2. Leverage blockchain selectively

Not every aspect of AI needs to be on-chain. Use blockchain where immutability and provenance are essential: recording model development decisions, logging AI inference events and storing digital identities. Consider hybrid architectures that store sensitive data off-chain while recording hashes and pointers on-chain. Evaluate whether a permissioned network (e.g., Hyperledger) suits enterprise needs or whether a public network with privacy layers is necessary (arxiv.org).
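A hybrid layout can be sketched with two stores: an append-only "on-chain" list that holds only a pointer and a salted hash commitment, and a mutable "off-chain" store that holds the data and its salt. The random salt stops anyone from confirming a guessed value against the public hash. Deleting the off-chain entry leaves a commitment that can no longer be linked to the original data. `HybridStore` and its methods are hypothetical, and whether this design satisfies erasure obligations is a legal judgment that this sketch does not settle.

```python
import hashlib
import secrets


class HybridStore:
    """Sensitive data off-chain (deletable); only salted commitments on-chain."""

    def __init__(self):
        self.off_chain = {}  # pointer -> (salt, data); mutable and deletable
        self.on_chain = []   # append-only list of (pointer, commitment)

    def put(self, data: bytes) -> str:
        pointer = secrets.token_hex(8)
        salt = secrets.token_bytes(16)  # fixed length, so salt + data is unambiguous
        commitment = hashlib.sha256(salt + data).hexdigest()
        self.off_chain[pointer] = (salt, data)
        self.on_chain.append((pointer, commitment))
        return pointer

    def verify(self, pointer: str) -> bool:
        """Check that the off-chain data still matches its on-chain commitment."""
        if pointer not in self.off_chain:
            return False
        salt, data = self.off_chain[pointer]
        commitment = dict(self.on_chain)[pointer]
        return hashlib.sha256(salt + data).hexdigest() == commitment

    def erase(self, pointer: str) -> None:
        # Removes data and salt; the on-chain commitment remains but is unlinkable.
        del self.off_chain[pointer]


store = HybridStore()
ptr = store.put(b'{"applicant": "Jane Doe", "income": 52000}')
print(store.verify(ptr))
store.erase(ptr)
print(store.verify(ptr), len(store.on_chain))
```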

3. Integrate explainability tools

Combine blockchain with explainable AI methods. Log SHAP or LIME scores alongside decision events so that auditors can see not only what happened but also why. Ensure explanations are understandable to non-technical stakeholders; dashboards and exception reporting help executives and regulators interpret AI behaviour.
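Turning attribution scores into plain language is one way to make logged explanations readable for non-technical reviewers. The hypothetical helper below ranks features by the absolute value of their attribution (for example SHAP values, measured relative to a baseline prediction) and describes the top few in a sentence. The wording and the `top_k` default are assumptions.

```python
def summarize(decision: str, attributions: dict, top_k: int = 2) -> str:
    """One-sentence summary of the largest feature contributions to a decision."""
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    parts = []
    for name, value in ranked[:top_k]:
        if value == 0:
            continue  # a zero contribution did not move the score
        direction = "raised" if value > 0 else "lowered"
        parts.append(f"{name.replace('_', ' ')} {direction} the score by {abs(value):.2f}")
    factors = "; ".join(parts) if parts else "no single factor stood out"
    return f"Decision: {decision}. Main factors: {factors}."


print(summarize("approve", {"income": 1.0, "debt_ratio": 2.0, "age": 0.1}))
print(summarize("deny", {"debt_ratio": -0.5, "income": 0.2}))
```

The same summary string could be stored next to the raw scores in the decision record, so dashboards and exception reports show a consistent explanation.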

4. Address privacy and scalability concerns

Blockchain’s immutability conflicts with GDPR’s right to erasure. Use techniques such as storing only hashes or encrypted pointers on-chain, with actual data stored off-chain and deletable. Consider zero-knowledge proofs for verifying decisions without revealing sensitive data. Assess scalability; some blockchains cannot handle the volume of transactions generated by high-frequency AI systems. Layer‑two scaling solutions or periodic batch recording may help.
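Periodic batch recording is often done with a Merkle tree. Decision records are hashed off-chain, and only the batch's single root hash is written to the ledger. A short inclusion proof can then show that any individual decision was part of the anchored batch. The sketch below borrows the leaf/node prefix bytes from RFC 6962 (Certificate Transparency) for domain separation, but its rule of duplicating the last node on odd levels is a simplification and does not match RFC 6962's tree shape. Function names are hypothetical.

```python
import hashlib


def _leaf(data: bytes) -> bytes:
    return hashlib.sha256(b"\x00" + data).digest()  # leaf prefix, as in RFC 6962


def _node(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()  # interior-node prefix


def _next_level(level: list) -> list:
    if len(level) % 2 == 1:
        level = level + [level[-1]]  # simplification: duplicate the last node
    return [_node(level[i], level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves: list) -> bytes:
    """Single hash committing to an entire batch of decision records."""
    if not leaves:
        raise ValueError("empty batch")
    level = [_leaf(leaf) for leaf in leaves]
    while len(level) > 1:
        level = _next_level(level)
    return level[0]


def merkle_proof(leaves: list, index: int) -> list:
    """Sibling hashes from leaf to root, each tagged with whether it sits on the left."""
    level = [_leaf(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 == 1 else level
        sibling = index ^ 1
        proof.append((padded[sibling], sibling < index))
        level = _next_level(level)
        index //= 2
    return proof


def verify_proof(leaf: bytes, proof: list, root: bytes) -> bool:
    node = _leaf(leaf)
    for sibling, sibling_is_left in proof:
        node = _node(sibling, node) if sibling_is_left else _node(node, sibling)
    return node == root


batch = [f"decision-{i}".encode() for i in range(5)]
root = merkle_root(batch)  # the only value written on-chain for this batch
print(root.hex())
print(verify_proof(batch[3], merkle_proof(batch, 3), root))
```

For a batch of n decisions, the proof contains about log₂(n) hashes. This is why batching can reduce on-chain writes substantially while still allowing each decision to be verified individually.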

Looking ahead: From compliance to competitive advantage

Decision attribution in AI is not just a regulatory checkbox; it is a source of competitive advantage. Transparent systems foster trust with customers and regulators, reduce the risk of costly litigation and improve the quality of AI models by exposing hidden biases and errors. Blockchain provides the technical backbone for immutable and auditable AI systems. By capturing every step of model development and every inference event, enterprises can prove compliance, demonstrate ethical practices and build resilient AI pipelines.

Enterprise leaders should view decision attribution as an investment in the future. As generative AI and autonomous agents continue to transform industries, those who embed accountability into their systems will be better positioned to innovate safely. Blockchain, when combined with rigorous governance, explainability and human oversight, offers a path toward responsible AI that is auditable and trustworthy.

Conclusion: Charting the path with FLEXBLOK

Building responsible AI with decision attribution requires both vision and technical execution. A blockchain platform that is designed for enterprise use can accelerate implementation. FLEXBLOK.io offers blockchain‑as‑a‑service capabilities tailored for AI governance. Its permissioned ledgers, smart contract templates and integration toolkits can help your organization record model lifecycle events, log AI decisions and manage digital identities without building infrastructure from scratch. By partnering with a platform like FLEXBLOK, compliance officers and C‑suite executives can not only meet regulatory requirements but also demonstrate to stakeholders that AI decisions are transparent, auditable and aligned with ethical principles. In a world where trust is the currency of innovation, leveraging blockchain for decision attribution may be the difference between being a leader and being left behind.